When Tech Breaks Trust

Real-World Consequences: More Than Just Glitches

Bias in AI systems and addictive tech designs aren’t just hypothetical problems—they’re already impacting millions of users every day. From subtly influencing decision-making to encouraging compulsive engagement, these design flaws have triggered serious real-world consequences.

- AI bias leads to unfair treatment in areas like hiring, credit scoring, and law enforcement

- Addiction by design keeps users scrolling, often at the cost of mental health, productivity, and well-being

- Opaque algorithms erode user trust and make it difficult to question or correct system errors

These aren’t edge cases—they’re systemic issues baked into widely used platforms.

Case Studies: When Technology Went Wrong

Facial Recognition & Racial Bias

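Independent research, including the 2018 Gender Shades study and a 2019 NIST evaluation of commercial algorithms, found that many facial recognition systems made far more errors on people of color, especially darker-skinned women, than on white men. Several U.S. cities, including San Francisco, responded by banning government use of the technology, particularly by police.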

Social Media & Youth Mental Health

Multiple studies and whistleblowers have highlighted how algorithm-driven social media platforms amplify harmful content, contributing to increased anxiety, depression, and body image issues in teens.

Recommendation Engines & Extremism

Recommendation features on video and news platforms have been criticized for creating radicalization pipelines—slowly feeding users more extreme content with each click, often without their awareness.

Long-Term Costs: Trust Broken, Accountability Demanded

The fallout from these incidents is far-reaching—not just for users but for tech companies themselves.

- Regulatory crackdowns: Governments worldwide are responding with legislation, such as the EU's Digital Services Act and AI Act, that demands more transparency and oversight

- User backlash: Distrust leads users to abandon platforms, seek alternatives, or demand tougher standards

- Brand damage: Once reputation is lost, it’s difficult—and expensive—to rebuild

Ultimately, trust is a currency platforms can’t afford to devalue. Moving forward, ethical tech design will be a competitive advantage—not just a compliance checkbox.

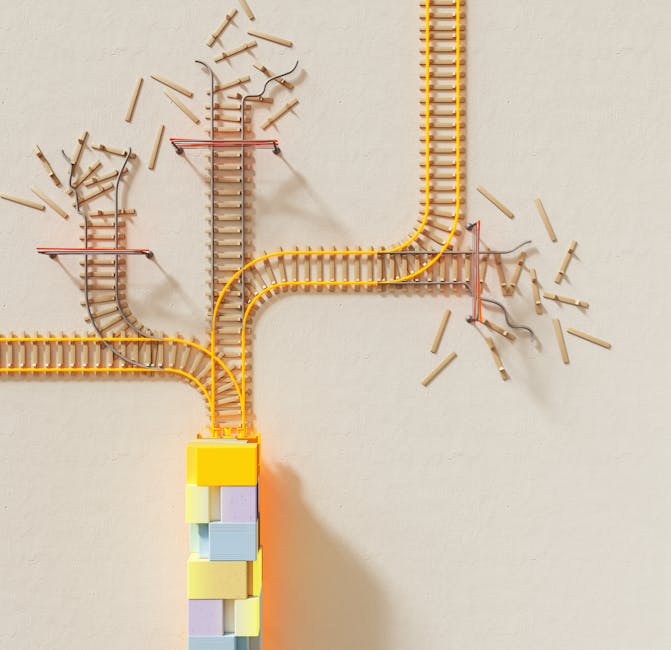

Bridging the Ethical Gap in Algorithm Design

Algorithms Aren’t Neutral

Algorithms may seem impartial, but they are created by humans—and that means they often reflect human biases. These biases can be embedded in the data used to train models or unintentionally reinforced through development choices.

- Biased data leads to biased outcomes

- Assumptions in code can mirror societal inequalities

- Impact is amplified when deployed at scale

Understanding that algorithms are not objective is the first step toward more responsible development.

Common Blind Spots in Development

Even well-meaning development teams face challenges when trying to build equitable systems. Blind spots often stem from a lack of diversity within teams or from not fully testing how systems perform across different user groups.

Frequent Oversights:

- Underrepresentation of marginalized communities in training datasets

- Narrow testing scenarios that miss real-world complexities

- Lack of stakeholder input during system design

These oversights can have serious consequences—from language models that reinforce stereotypes to recommendation engines that exclude entire groups.

The Role of Ethical Design Audits

Ethical design audits give teams an opportunity to catch potential problems early—before deployment. They involve a structured review of both technical and human factors throughout the system lifecycle.

What an Ethical Audit Looks Like:

- Reviewing dataset sources and diversity

- Testing outputs for edge cases and biases

- Including ethicists or outside experts in the review process

- Creating action plans for remediation
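The "testing outputs for biases" step can start simply. One common first screen compares how often each group receives a favorable outcome; U.S. employment guidance (the "four-fifths rule") treats a group's selection rate below 80% of the highest group's rate as a sign of possible adverse impact. The sketch below computes those ratios. The function names, group labels, and sample data are hypothetical, and this is one screening metric among many, not proof of fairness or unfairness on its own.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record is (group label, whether the system gave the favorable outcome).
Outcome = Tuple[str, bool]


def selection_rates(outcomes: Iterable[Outcome]) -> Dict[str, float]:
    """Fraction of favorable outcomes (e.g. 'approved', 'shortlisted') per group."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        if selected:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def flag_disparate_impact(
    outcomes: Iterable[Outcome], threshold: float = 0.8
) -> Dict[str, float]:
    """Map each group whose rate falls below `threshold` x the best group's
    rate to its impact ratio. 0.8 mirrors the four-fifths rule of thumb."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}  # no data, nothing to compare
    best = max(rates.values())
    if best == 0:
        return {}  # no group received a favorable outcome; ratios are undefined
    ratios = {group: rate / best for group, rate in rates.items()}
    return {group: ratio for group, ratio in ratios.items() if ratio < threshold}


if __name__ == "__main__":
    # Hypothetical audit sample: 100 decisions for each of two groups.
    sample = (
        [("group_a", True)] * 60 + [("group_a", False)] * 40
        + [("group_b", True)] * 30 + [("group_b", False)] * 70
    )
    print("selection rates:", selection_rates(sample))
    print("flagged groups:", flag_disparate_impact(sample))
```

In this sample, group_b is selected half as often as group_a, well under the 0.8 threshold, so it is flagged. A flag is a prompt for a remediation plan rather than a verdict; small samples in particular can produce noisy ratios.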

Proactive audits can turn ethical risks into design opportunities, helping developers build systems that are not only more inclusive but also more robust.

Ultimately, ethical awareness needs to be built into the culture of AI development, not treated as a final checklist item.

Introduction

Vlogging didn’t just survive the chaos of the early 2020s; it evolved with it. As platforms surged, fractured, and matured, creators kept pace. Through algorithm shifts, monetization headaches, and audience fatigue, vlogging stayed sticky. Why? Because at its core, vlogging is raw, human, and adaptive: built for change.

Now, 2024 brings a new kind of pressure. The tools are smarter, the audiences pickier, the platforms less forgiving. It’s not just about shooting and posting anymore. It’s about reading trendlines, understanding tech, and delivering value faster than ever. This year, creators who ignore what’s shifting—AI integration, content formats, policy updates—risk fading out. The ones who listen, adapt, and focus? They’ll build stronger brands, tighter communities, and more stable revenue than ever before.

UX with Conscience: Designing for Well-being, Not Just Engagement

In 2024, vlogging isn’t just about keeping eyes on screens—it’s about designing digital spaces that don’t wear people down. Creators and UX teams are starting to ask the right questions: Does this interface make people feel good, or just keep them addicted? Are we building community, or chasing dopamine loops?

The shift is subtle but significant. Timed breaks, comment filtering that actually works, and smarter notifications are no longer optional—they’re becoming expected. The platforms prioritizing healthy experiences are seeing longer-term loyalty. Vloggers who bake mindfulness into their flow—like setting posting boundaries, building in offline prompts, or promoting viewer self-awareness—are earning more than views. They’re building trust.

Same goes for accessibility. Designing for screen readers, providing captions, ditching low-contrast overlays: this isn’t “nice to have.” It’s basic. Inclusive design reduces friction, opens your channel to tens of millions more potential viewers, and may also get a boost from recommendation systems that increasingly weigh interaction quality, not just quantity.
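"Low-contrast" has a measurable definition. WCAG 2.x defines a contrast ratio between two colors, based on their relative luminance, and asks for at least 4.5:1 for normal text and 3:1 for large text at level AA. The sketch below implements that formula for `#RRGGBB` colors; the function names and the hex-string input format are assumptions for illustration, and most teams would lean on an existing accessibility checker in practice.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert one 0-255 sRGB channel to linear light, per the WCAG 2.x formula."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance (0 = black, 1 = white) of a '#RRGGBB' color."""
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError(f"expected #RRGGBB, got {hex_color!r}")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio (L_lighter + 0.05) / (L_darker + 0.05), from 1 to 21."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """Level AA: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, mid-grey `#777777` on white comes out just under 4.5:1 and fails AA for body text, while the slightly darker `#767676` clears it. The argument order does not matter, since the function always puts the lighter color on top of the fraction.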

Bottom line: designing with intention isn’t just ethical. It multiplies your reach and deepens your impact. It’s not about lowering excitement—it’s about raising the standard.

Trust Is Now a Competitive Advantage

Audiences aren’t just watching—they’re paying attention. Creators who are open about how they use AI, how they protect user data, and where their revenue comes from are getting the edge. In a media landscape shaped by ad fatigue, misinformation, and algorithm tweaks, transparency has become currency.

People want control. They want unsubscribe buttons that actually unsubscribe. They want to know why an app requests access to their mic or camera. Creators who get this—and who make ethical choices clear and visible—are earning long-term loyalty over short-term views.

It’s not just the fans who are noticing. Investors are backing creators who understand digital responsibility. Regulators are adding pressure too, especially in the EU (through the GDPR) and California (through the CCPA). For vloggers, that means privacy policies, clear disclosures, and tight control over email lists and analytics aren’t optional anymore; they’re part of the business model.

Bottom line: trust helps you stand out, now more than ever.

Ethics at Every Stage of the Product Lifecycle

Bringing ethics into product development is more than a one-time decision—it’s a continuous, intentional practice that should be embedded throughout the entire lifecycle of a product. From conception to deployment and beyond, ethical thinking must be part of the process.

Embedding Ethics Across the Lifecycle

Every stage of product creation carries ethical considerations. Integrating them early and consistently leads to more responsible innovation:

- Ideation & Research: Question assumptions. Who benefits? Who could be harmed? Ask diverse voices for input early on.

- Design & Prototyping: Prioritize accessibility, fairness, and transparency. Avoid dark patterns and misleading UX choices.

- Development & Testing: Implement testing for bias, privacy, and potential misuse. Include edge cases and underrepresented users.

- Launch & Marketing: Be honest about what the product can—and can’t—do. Avoid overstated claims.

- Post-Launch: Monitor for unintended consequences. Set up channels for user feedback and be ready to iterate responsibly.

What Teams Really Need

To make ethics actionable, teams require more than good intentions. They need structure and support:

- Training: Regular education on ethics in tech, bias in data, accessibility standards, and inclusive design.

- Challenge: Create space for critical voices and dissent. Encourage team members to ask tough questions.

- Accountability: Assign clear ownership for ethical outcomes. Build checkpoints into project timelines.

Final Thought: Progress Over Perfection

Ethical product development isn’t about always getting it right. It’s about staying clear-eyed, responsible, and willing to adapt. It’s a mindset, not a milestone. As teams prioritize ethics at each stage, the goal is to stay open, transparent, and committed to doing better, even when it’s difficult.

Why Ethical Thinking Should Be Baked Into Your Sprint, Not Bolted On

Let’s cut to it: ethical design isn’t a last-minute checklist. It’s something that needs to start before the first commit, not after the MVP ships. When developers wait until the end of a sprint—or worse, post-release—to ask, “Is this responsible?” it’s already too late. The real cost shows up later: unintended harm, user mistrust, compliance issues, broken communities.

Instead, bake ethical questions into the sprint rhythm. As you plan features, ask: Who does this leave out? What data are we capturing—and why? Could this be misused a year from now? These are lightweight questions with heavyweight consequences.

There are tools that help. Think Mozilla’s Responsible Computing Framework, the Ethical OS Toolkit, or even simple techniques like the Five Whys, applied not just to bugs but to impact. Good teams don’t treat these as blockers. They treat them the way they treat linting or CI checks: just part of the build.
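To make the linting-or-CI idea concrete for one sprint question ("What data are we capturing, and why?"), here is a minimal sketch of a hypothetical check that fails when a captured data field has no documented purpose. The inventory format (a dict of field name to stated reason), the function names, and the sensitive-field list are all assumptions for illustration; none of this comes from the toolkits named above.

```python
import sys
from typing import AbstractSet, Dict, List, Optional

# Hypothetical set of fields the team has agreed to treat as sensitive.
SENSITIVE_FIELDS: AbstractSet[str] = frozenset(
    {"precise_location", "microphone_audio", "contacts"}
)


def find_undocumented(
    inventory: Dict[str, Optional[str]],
    sensitive: AbstractSet[str] = SENSITIVE_FIELDS,
) -> List[str]:
    """Return one message per captured field that lacks a stated purpose.

    `inventory` maps each captured data field to the reason it is collected;
    None or a blank string means nobody has written the reason down.
    """
    problems = []
    for field, purpose in sorted(inventory.items()):
        if purpose is None or not purpose.strip():
            kind = "sensitive field" if field in sensitive else "field"
            problems.append(f"{kind} '{field}' has no documented purpose")
    return problems


def run_check(inventory: Dict[str, Optional[str]]) -> int:
    """Print problems to stderr and return a CI-style exit code (0 = pass)."""
    problems = find_undocumented(inventory)
    for message in problems:
        print(f"data-inventory: {message}", file=sys.stderr)
    return 1 if problems else 0
```

A CI step could load the team's reviewed inventory file and end with `sys.exit(run_check(inventory))`, so an unexplained new field fails the build the same way a lint error would, and the "why" question gets asked on every change rather than once a year.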

And here’s the kicker: development isn’t just code, it’s culture. How teams write, review, and prioritize says a lot about what they stand for. Building fast is important. But building with eyes open? That’s how you make software that lasts.

Lead Software Strategist
