Voice Commands Now Syncing Across Devices

Voice technology is stepping into a new era—one where commands can be recognized and executed across multiple devices without interruption. This evolution is making the user experience more streamlined, intuitive, and device-agnostic.

From Fragmented to Fluid

For years, voice assistants were locked within individual ecosystems. Now, that’s changing. Voice commands are increasingly synced across platforms and devices, bridging the gap between phones, tablets, smart speakers, and TVs.

- Start a voice-driven task on your smartphone and finish it hands-free on your smart speaker

- Ask your tablet to add a reminder, and it will sync immediately with your smartwatch

- Pause a video on your TV with a voice command, then resume it from the same point on your mobile device

Seamless Experiences for Real Life

What does this mean in everyday use? Users can now:

- Move between environments without breaking flow

- Use whichever device is closest or most convenient

- Expect consistent results across apps and hardware

This fluidity reduces friction and helps voice interactions feel more like natural conversations than isolated commands.

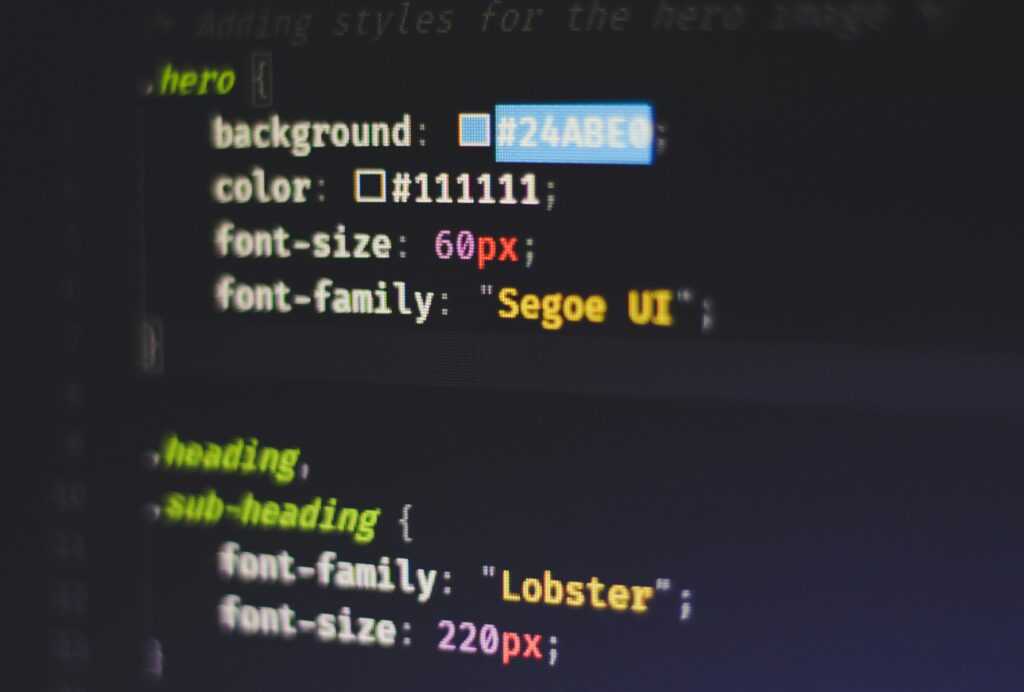

Implications for UX and App Design

For designers and developers, the rise in cross-device voice syncing means new considerations:

- Continuity: Apps must support session persistence and state handoff across devices

- Context awareness: Voice assistants should understand user intent based on recent interactions, regardless of the originating device

- Interface simplification: As more actions are taken via voice, UI elements can shift to prioritize follow-up intents and confirmations
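
To make the continuity point concrete, here is a minimal sketch of state handoff for the "pause on the TV, resume on the phone" case. It assumes a shared session service that every device can reach; the `PlaybackSession` and `SessionStore` names, the in-memory dictionary, and the device labels are all hypothetical stand-ins, not any particular platform's API.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class PlaybackSession:
    """State that has to survive a move from one device to another."""
    user_id: str
    media_id: str
    position_seconds: float = 0.0
    paused: bool = False
    last_device: Optional[str] = None


class SessionStore:
    """In-memory stand-in for a shared session service (an assumption)."""

    def __init__(self) -> None:
        self._sessions: Dict[str, PlaybackSession] = {}

    def pause(self, user_id: str, media_id: str,
              position_seconds: float, device: str) -> PlaybackSession:
        # Persist where the user stopped and which device they were on.
        session = PlaybackSession(user_id, media_id, position_seconds, True, device)
        self._sessions[user_id] = session
        return session

    def resume(self, user_id: str, device: str) -> Optional[PlaybackSession]:
        # Any device can pick up the session; None means nothing to resume.
        session = self._sessions.get(user_id)
        if session is None:
            return None
        session.paused = False
        session.last_device = device
        return session


store = SessionStore()
store.pause("user-1", "video-42", 754.5, device="living-room-tv")
resumed = store.resume("user-1", device="phone")
print(resumed.media_id, resumed.position_seconds, resumed.last_device)
```

Keying the session by user rather than by device is the design choice that matters here: the voice command can arrive from whichever device is closest, and the state follows the person.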

Cross-device voice syncing isn’t just a novelty—it’s a fundamental shift in how users expect to interact with technology. Creators and developers who lean into this trend will set a new bar for convenience and immersion.

Voice tech used to be a nice-to-have. Now it’s becoming a standard. We’ve gone from clunky, easily confused assistants to seamless voice interfaces that actually understand what we mean—not just what we say. The leap in quality is no accident. Smarter natural language processing (NLP), sharper AI models, and tight hardware-software integration have all turned voice from gimmick to go-to.

In 2024, voice is officially sitting at the table with touch and type. Whether it’s voice navigation in apps, hands-free video editing commands, or even real-time language translation while vlogging, creators are starting to lean on it. It’s about speed and accessibility. You don’t need to put down your camera to send a message anymore.

AI is the muscle behind the shift, but the brains come from how it’s paired with better mics, faster processors, and refined training data. The result? Voice tech that feels less like dictation and more like conversation. And for vloggers, that means less friction between the idea and the upload.

Voice assistants aren’t just reading the weather or setting timers anymore—they’re starting to understand intent. Thanks to major AI upgrades, tools like Alexa, Siri, and Google Assistant are learning how users think, talk, and act. They’re pulling in context from past interactions, routines, and even nearby devices to tailor responses that feel less robotic and more human.

Ask your assistant to play music during dinner, and it remembers your usual playlist. Say, “Turn the lights down” while watching Netflix, and it dims the bulbs to your regular evening level. These systems are moving past command-and-response and into relationship-building mode.

It’s not just something you access through your phone, either. Voice user interfaces are everywhere—cars, fridges, thermostats, office lobbies. The result? We’re not tapping screens as much. We’re speaking. Creators, brands, and developers are rethinking how content and services fit into those quiet voice exchanges—all powered by AI that’s smarter, but still learning fast.

Voice Tech Becomes More Inclusive—By Design

For a long time, voice tech was built for an assumed average user: able-bodied, a native English speaker, with generic preferences. That’s changing fast. In 2024, voice-first technology is leaning into accessibility and nuance, and it’s opening the door wide for people who’ve been sidelined in the digital conversation.

One of the biggest breakthroughs? Controlling a device no longer requires a screen. Voice assistants are getting better at allowing users with visual or motor impairments to navigate devices independently. Whether it’s controlling a camera hands-free or managing vlog edits through voice-only prompts, the barrier to entry is lower than ever.

Multilingual support is also stepping up. Speech recognition systems are adapting to regional dialects, accented English, and code-switching in real time. For creators in global markets, this means audio commands that work the way they talk, not the way a Silicon Valley engineer thinks they should.

And there’s an ethical shift happening too. Brands are realizing that everything from tone to the gender of virtual assistants affects how users feel represented—or alienated. Vlogging tools with customizable voice interfaces, neutral tone defaults, or even anonymous AI voices are becoming more common. It’s not just about making the tech smarter. It’s about making it more human.

Always-On Mics: Are Users Really Protected?

Smart devices—from phones and wearables to home assistants—are listening more than ever. With always-on microphones built into everyday tech, privacy concerns continue to grow. Users are asking an essential question: How protected are we really?

What Are Always-On Microphones?

Always-on mics enable devices to hear trigger words like “Hey Siri” or “OK Google” even when not actively in use. While they offer convenience, they also raise questions:

- Are these devices listening all the time?

- What data do they collect—and where is it stored?

- Can conversations be recorded without consent?

Advances in Voice Biometric Technology

Modern voice recognition isn’t just about triggering commands—it’s becoming part of user authentication. New developments in voice fingerprinting and biometrics are changing how devices identify and interact with us:

- Voice fingerprinting: creating unique acoustic profiles for individuals

- Biometric authentication: using voice as a secure, hands-free login method

- Adaptive learning: improving over time for better accuracy and fewer false identifications

While powerful, these technologies come with a need for tight security protocols and user control.
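
The three ideas in the list above can be sketched in a few lines. This is an illustration under stated assumptions, not how any shipping assistant works: it assumes each utterance has already been turned into a fixed-length numeric vector (a "voiceprint") by some upstream model, and the vectors, the `0.85` threshold, and the `0.1` update rate are made-up values chosen only for the example.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Similarity of two voiceprint vectors, in the range [-1, 1]."""
    if len(a) != len(b):
        raise ValueError("voiceprints must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_same_speaker(enrolled: Sequence[float], sample: Sequence[float],
                    threshold: float = 0.85) -> bool:
    """Biometric check: accept only if the sample is close to the profile."""
    return cosine_similarity(enrolled, sample) >= threshold


def update_profile(enrolled: Sequence[float], sample: Sequence[float],
                   rate: float = 0.1) -> List[float]:
    """Adaptive learning: nudge the stored profile toward an accepted sample."""
    return [(1 - rate) * e + rate * s for e, s in zip(enrolled, sample)]


# Hypothetical voiceprints; real ones would have many more dimensions.
enrolled = [0.9, 0.1, 0.4]
same_speaker = [0.88, 0.12, 0.41]
other_speaker = [0.1, 0.9, 0.2]

print(is_same_speaker(enrolled, same_speaker))
print(is_same_speaker(enrolled, other_speaker))
```

The threshold is where the security trade-off lives: raising it reduces false accepts but rejects the real user more often, which is one reason voice is usually paired with another factor for sensitive actions.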

What Are Companies Doing About Privacy?

Tech companies are under pressure to increase transparency and limit risks. In response, many are rolling out measures to improve trust:

- User control panels: Tools that let individuals view, delete, or manage recorded voice data

- On-device processing: Voice data is handled locally rather than shipped to the cloud

- Visual indicators: LED lights or notifications show when a mic is active

- Privacy-first design: Devices that listen locally for the trigger word and only start recording or transmitting audio after it is detected
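
A rough sketch of how trigger-word gating, on-device handling, and a visual indicator fit together is shown below. Text strings stand in for audio frames so the example stays runnable; a real device would run an acoustic wake-word detector on raw audio. The `WakeWordGate` class, the "hey assistant" phrase, and the two-frame command window are hypothetical choices made for illustration.

```python
from typing import List

WAKE_WORD = "hey assistant"  # hypothetical trigger phrase


class WakeWordGate:
    """Drops everything heard before the wake word; forwards only the command."""

    def __init__(self, wake_word: str = WAKE_WORD, command_frames: int = 2) -> None:
        self.wake_word = wake_word
        self.command_frames = command_frames
        self._remaining = 0             # frames left in the current command window
        self.mic_indicator_on = False   # stands in for an LED or notification
        self.forwarded: List[str] = []  # the only data that leaves the gate

    def process(self, frame: str) -> None:
        if self._remaining > 0:
            # Inside a command window: this frame may be processed further.
            self.forwarded.append(frame)
            self._remaining -= 1
            if self._remaining == 0:
                self.mic_indicator_on = False
            return
        # Outside a window: inspect the frame locally, then discard it.
        if self.wake_word in frame.lower():
            self._remaining = self.command_frames
            self.mic_indicator_on = True


gate = WakeWordGate()
frames = [
    "private dinner conversation",
    "more private conversation",
    "Hey assistant",
    "turn the lights down",
    "to thirty percent",
    "back to private conversation",
]
for frame in frames:
    gate.process(frame)

print(gate.forwarded)
```

Only the two frames after the trigger phrase end up in `forwarded`; everything before and after is inspected and thrown away, which is the behavior the indicator light is meant to make visible.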

Despite these efforts, skepticism remains. For creators and everyday users alike, understanding the capabilities of always-on mics—and being aware of consent and control options—is more important than ever.

Voice Tech Gains Traction in the Enterprise

Voice technology isn’t just sitting in your smartphone or smart speaker anymore—it’s showing up at the office. From automatic meeting transcriptions to AI-powered assistants managing workflows, voice interfaces are quietly becoming part of daily routines in enterprise settings. Sales reps talk directly to CRMs. Project managers summarize calls without typing. Internal documentation happens on the fly.

Industries like healthcare, logistics, and sales are leaning in fast. Why? Hands-free means less context-switching and more focus. For workers juggling screens, tools, and schedules, voice is helping cut friction. It’s not about replacing people—it’s about making them faster.

As remote and hybrid work models mature, voice is stepping in as a key UI in this post-screen world. The big shift? Voice is no longer just convenient. It’s strategic.

Emerging Tech: Emotion Detection, Real-Time Translation, and Quantum Hints

The line between vlogging and tech is getting blurrier—fast. In 2024, vloggers are tapping into next-gen tools that once felt like sci-fi. Emotion detection is here now, helping creators understand real-time audience reactions through facial cues and voice inflections. It’s already shaping how content is edited—and even when it’s published. Real-time translation is also leveling the playing field, turning local creators into global voices with a few clicks.

Then there’s conversational commerce, where viewers can shop directly through interactive video prompts. Influencer deals get tighter, sales funnels get shorter, and content doubles as storefront.

But all this functionality comes with a caveat. Machine learning models are inching closer to human-level nuance—mimicking tone, behavior, even humor. Cool? Sure. But also murky. Questions around authenticity, data privacy, and deepfake abuse are no longer theoretical.

And yes, this all plugs into bigger tech waves. Quantum computing isn’t mainstream yet, but it’s influencing how we think about data processing on a foundational level. Expect crossover in modeling, analytics, and generative tools by decade’s end.

(For a broader view, check out “The Future of Quantum Computing: What to Expect by 2030.”)

Voice Is No Longer Experimental: It’s Essential

The days of voice as a novelty are gone. In 2024, it’s becoming core to how audiences interact with content—especially in vlogging. Whether it’s voice commands for playback or creators using voice clones to streamline editing, audio-based tools are showing up at every stage of the workflow.

But with convenience comes complexity. As voice interfaces become more intuitive, the need for responsible design grows. It’s not just about speed—it’s about trust. Viewers want to know when a voice is real. Creators want their tone preserved, not flattened by generic AI filters. There’s a fine line between assistive and artificial, and it’s easy to overstep.

So what now? Developers need to build guardrails. Brands should think twice before over-automating. And creators must stay intentional, using voice tech to elevate storytelling, not cut corners. In the end, voice will shape the future of vlogging not simply because the technology exists, but because it makes sense for everyone involved.

Lead Software Strategist
